The Critical Role of Sensors in Computer Vision and Robotics

- Liz Gibson

- May 1

Updated: Aug 8

As artificial intelligence and robotics continue to evolve, the importance of sensors in computer vision has never been more apparent. These sensors serve as the eyes and, in some cases, the ears of intelligent machines, feeding the raw data that AI algorithms need to interpret the physical world and make decisions in real time.

From autonomous vehicles to warehouse robots and inspection systems, sensors enable machines to perceive, analyze, and interact with their surroundings accurately and efficiently. Without them, even the most advanced computer vision models would be blind.

Understanding the Role of Sensors in Computer Vision

Sensors in computer vision are the hardware components responsible for capturing environmental data. These sensors collect different types of input—visual, thermal, spatial, and motion—which are then processed by AI and machine learning algorithms to guide robotic behavior or automate decision-making.

Key sensor types include:

RGB Cameras

Standard color cameras provide 2D image data. They’re commonly used in applications like object detection, facial recognition, and barcode scanning. Their main limitation is that a single RGB camera provides no direct depth measurement.

Depth Sensors

Depth sensors, such as stereo, structured-light, and time-of-flight cameras, measure the distance to each point in view, often alongside a color image. This lets robots and computer vision systems understand spatial relationships, which is essential in tasks like gesture recognition, 3D reconstruction, and navigation in cluttered environments.
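To make the idea of spatial understanding concrete, here is a minimal sketch of how one depth pixel can be turned into a 3D point using the standard pinhole camera model. The function name and the intrinsics (fx, fy, cx, cy) are illustrative assumptions, not values from any particular sensor; real devices publish their own calibration.

```python
from typing import Tuple


def pixel_to_point(
    u: float, v: float, depth_m: float,
    fx: float, fy: float, cx: float, cy: float,
) -> Tuple[float, float, float]:
    """Back-project pixel (u, v) with a measured depth into camera coordinates.

    fx, fy are focal lengths in pixels and (cx, cy) is the principal point.
    All four are hypothetical calibration values supplied by the caller.
    """
    x = (u - cx) * depth_m / fx  # metres to the right of the optical axis
    y = (v - cy) * depth_m / fy  # metres below the optical axis
    return (x, y, depth_m)       # z is the measured depth itself


# A pixel 300 columns right of the image centre, seen at 2 m depth.
point = pixel_to_point(620, 240, 2.0, fx=600.0, fy=600.0, cx=320.0, cy=240.0)
```

Applying this to every pixel of a depth image yields a point cloud, which is the usual starting point for 3D reconstruction and obstacle avoidance.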

LiDAR (Light Detection and Ranging)

LiDAR emits laser pulses and times their return to measure the distance between the sensor and surrounding objects, building precise 3D maps from the resulting points. It’s critical for autonomous driving, drones, and industrial robots that need to operate in dynamic, unstructured settings.
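As a rough illustration of the ranging principle, the sketch below converts a pulse's round-trip time into a distance and then places that return in 3D from the beam angles. It assumes a simple pulsed time-of-flight design; the function names and the angle convention (azimuth around the vertical axis, elevation above the horizontal plane) are assumptions made for this example.

```python
import math
from typing import Tuple

SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to the target: the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def polar_to_cartesian(
    range_m: float, azimuth_rad: float, elevation_rad: float
) -> Tuple[float, float, float]:
    """Convert one LiDAR return (range plus beam angles) to an x, y, z point."""
    horizontal = range_m * math.cos(elevation_rad)  # projection onto the ground plane
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return (x, y, z)


# A pulse that returns after one microsecond hit something about 150 m away.
distance_m = range_from_round_trip(1e-6)
```

Repeating this for every pulse in a sweep is what produces the 3D map described above.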

Infrared and Thermal Sensors

These sensors detect heat signatures, enabling applications in security, search and rescue, and medical diagnostics. They’re especially valuable in low-visibility conditions where RGB cameras are ineffective.

Sensor Fusion: Combining Strengths for Smarter Perception

The real power of sensors in computer vision emerges when different sensor types are used together—a concept known as sensor fusion. By combining multiple data streams, systems gain a more comprehensive and accurate understanding of their environment.

For example:

A delivery drone might use LiDAR for mapping terrain, GPS for location tracking, and RGB cameras for visual confirmation of drop-off points.

A healthcare robot may use thermal sensors to detect body temperature, alongside a depth sensor for safe navigation around patients.

Sensor fusion not only enhances accuracy but also enables redundancy—crucial in safety-critical applications like autonomous vehicles and robotic surgery.
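One simple way to see both benefits is inverse-variance weighting, a common textbook technique in which each sensor's estimate is weighted by how much it is trusted. The sketch below is an illustration under that assumption, not the method of any specific product; the function name and the example variances are hypothetical. A sensor that drops out is passed as None, and the remaining sensors still produce an answer.

```python
from typing import Optional, Sequence, Tuple

Reading = Tuple[float, float]  # (measured value, variance of that measurement)


def fuse_readings(readings: Sequence[Optional[Reading]]) -> Reading:
    """Fuse estimates of the same quantity, weighting each by 1 / variance.

    Entries that are None (sensor offline or no return) are skipped, which is
    the redundancy benefit. Variances are assumed to be strictly positive.
    """
    valid = [r for r in readings if r is not None]
    if not valid:
        raise ValueError("no sensor produced a reading")
    weights = [1.0 / variance for _, variance in valid]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, valid)) / total_weight
    fused_variance = 1.0 / total_weight  # never larger than the best single sensor
    return fused_value, fused_variance


# Hypothetical distance-to-obstacle estimates in metres from two sensors.
both = fuse_readings([(10.0, 1.0), (12.0, 1.0)])
# The second sensor is unavailable, so the first one carries the estimate alone.
fallback = fuse_readings([(10.0, 0.04), None])
```

With two equally trusted sensors the fused variance is half that of either one, which is the accuracy gain; production systems typically use a Kalman filter or a similar estimator that also models motion over time.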

Real-World Applications Across Industries

The integration of advanced sensors is driving innovation across multiple sectors:

Autonomous Vehicles

Self-driving cars rely on a suite of sensors—LiDAR, radar, cameras, and ultrasonic sensors—to detect obstacles, read road signs, and make split-second driving decisions.

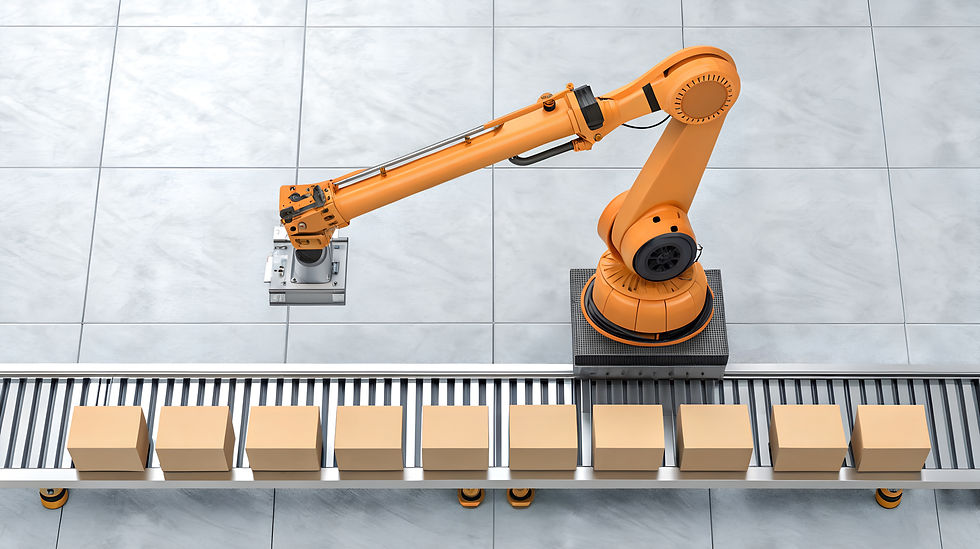

Smart Manufacturing

Robotic arms in manufacturing use vision sensors to inspect product quality, identify parts, and guide precise movements without human intervention.

Aerial Drones

Equipped with depth and motion sensors, drones can fly autonomously, avoid collisions, and capture high-resolution imagery for agriculture, construction, and mapping.

Healthcare

Computer vision powered by multi-sensor input is enabling contactless patient monitoring, early disease detection, and more responsive assistive technologies.

The Future of Sensors in Computer Vision

As sensor technology becomes more compact, affordable, and powerful, the capabilities of computer vision systems will continue to expand. Higher resolution, greater depth accuracy, and better data synchronization will make AI-powered systems even more capable in dynamic environments.

From robotics to remote sensing, the strategic use of sensors in computer vision will be central to unlocking the next wave of AI-driven automation, safety, and intelligence.